I’ve found one of the best ways to learn about something is to experiment with it. This belief informed our experiment with genAI that resulted in Yarno AI. Recently, we’ve run a similar experiment using genAI to summarise and provide insights on campaign feedback.

Campaign feedback

Learning in Yarno is delivered in microlearning campaigns. These consist of quiz questions that are either delivered over time in an embed campaign, or answered in one go with a burst campaign.

At the end of each campaign, learners are asked:

- How would you rate your overall experience of this campaign? (1-5 stars)

- What have we done well? What can we improve? (free text)

- What would you like to see in the future? (free text)

Answers to these questions are shared in the campaign dashboard. Customer admins use this feedback to understand how the campaign was received, what learners enjoyed, what they think could be done better, and suggestions for future campaigns. Yarno Customer Success Managers also share post-campaign reports with customers after select campaigns, and include a high-level feedback summary to review in the context of the campaign objectives and results.

Feedback is a gift

Campaign feedback is super valuable to customer admins and a differentiator of the Yarno platform. Admins get direct, actionable feedback from learners. They are often surprised by specific suggestions and recommendations for future training, which makes it easier to plan their next campaign and gives them peace of mind that it covers topics learners care about.

This can be a rich data set, especially for customers who receive thousands of feedback responses. And herein lies the rub: too much of a good thing becomes a problem! The sheer volume of feedback makes it time-consuming and challenging to review, make sense of, and draw conclusions from. Our AI team discussed this challenge and thought it was a good candidate for AI summarisation.

Approach

We had previously discussed summarising feedback with non-AI tools. Since then, we've realised we really want to capture the sentiment in the feedback, which genAI does well.

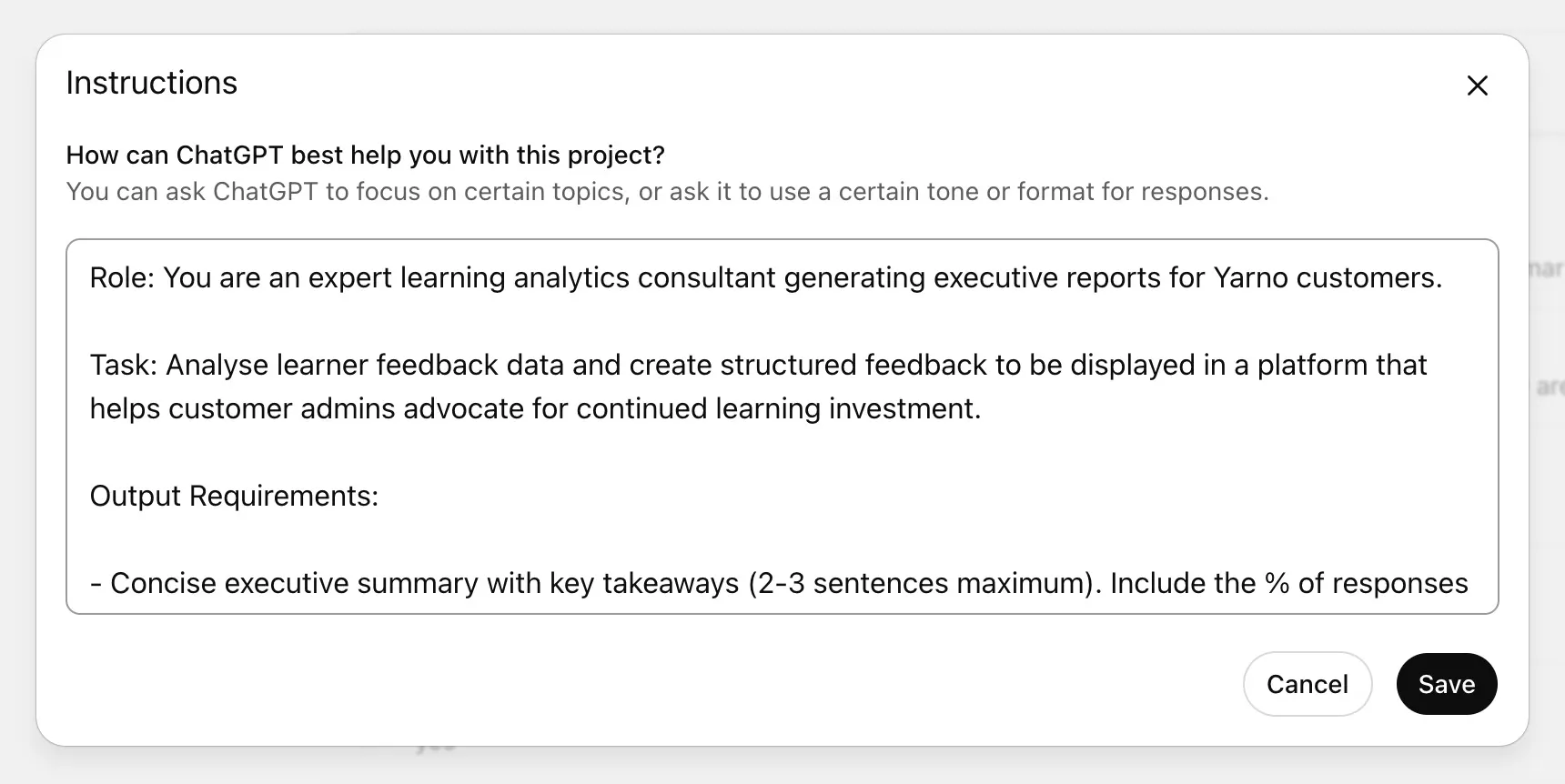

So we chose two LLMs, ChatGPT and Claude. We created a project in ChatGPT so that the AI team could all use the same prompt and collaborate. One benefit of a ChatGPT project is that you can give it a set of instructions or, in our case, background context that every prompt in that project uses. This means everyone on the team starts from the same base context and doesn't have to re-introduce it in each prompt.

We wrote a prompt and asked the models for feedback on it, so that it would yield the best results. We shared any prompt edits we made in the AI team’s Slack channel.

Our success metrics were:

- The summary shown to the customer admin is accurate

- General themes and sentiment match the feedback

- Recommendations for future campaigns match the feedback

- No “made-up” or obviously false information is added to the summary

For information security, we needed to ensure the data was clean. We agreed to manually de-identify and anonymise the data we’d share with the models by reviewing and removing any customer or learner names and references.

We followed these specific steps:

- Identify several customer campaigns for testing, in the following categories:

  - Large learner cohort, product training focus

  - Large learner cohort, compliance training focus

  - Small learner cohort

- Extract feedback from the Yarno platform as a spreadsheet

- Clean and de-identify the data

- Provide cleaned data to ChatGPT and Claude, along with the prompt

- Review outputs against success metrics
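We cleaned the data by hand, reviewing every row. If you wanted a scripted first pass before that human review, a minimal Python sketch might look like the one below. It assumes three things. First, the export is a CSV with one row per learner response. Second, identifying columns such as `Learner name` and `Email` can simply be dropped. Third, you already have a list of names to redact. Those column names and the helper functions (`build_redactor`, `de_identify_csv`) are hypothetical and do not reflect Yarno's actual export format.

```python
import csv
import io
import re

# Hypothetical: catches email addresses that learners type into free-text answers.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def build_redactor(names):
    """Return a function that replaces known names and emails with [REDACTED]."""
    # Longest names first, so "Jane Smith" is redacted as a whole before "Jane".
    ordered = sorted({n for n in names if n}, key=len, reverse=True)
    name_pattern = (
        re.compile(r"\b(?:" + "|".join(map(re.escape, ordered)) + r")\b", re.IGNORECASE)
        if ordered
        else None
    )

    def redact(text):
        # Emails first, so a name inside an address doesn't leave a partial email behind.
        text = EMAIL_PATTERN.sub("[REDACTED]", text)
        if name_pattern is not None:
            text = name_pattern.sub("[REDACTED]", text)
        return text

    return redact


def de_identify_csv(csv_text, names, drop_columns, text_columns):
    """Drop identifying columns and redact names/emails in free-text columns."""
    redact = build_redactor(names)
    reader = csv.DictReader(io.StringIO(csv_text))
    kept = [c for c in reader.fieldnames if c not in drop_columns]
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=kept, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        clean = {c: row[c] for c in kept}
        for c in text_columns:
            if clean.get(c):
                clean[c] = redact(clean[c])
        writer.writerow(clean)
    return out.getvalue()


raw = (
    "Learner name,Email,Rating,What have we done well?\n"
    'Jane Smith,jane@example.com,5,"Great quizzes, thanks Jane and the Acme team"\n'
)
print(de_identify_csv(
    raw,
    names=["Jane Smith", "Jane", "Acme"],
    drop_columns={"Learner name", "Email"},
    text_columns=["What have we done well?"],
))
```

Simple pattern matching like this will miss misspelt names and indirect references, such as a store location or a manager's role. It could speed up manual review, but it can't replace it.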

Learnings

Mostly accurate

Overall, the summaries that ChatGPT and Claude produced were accurate. They were able to summarise themes and draw conclusions from the feedback provided.

We discovered that Claude preferred .csv files to .xlsx, and that Claude’s responses were wordier than ChatGPT’s, even with the same prompt.

Hallucination

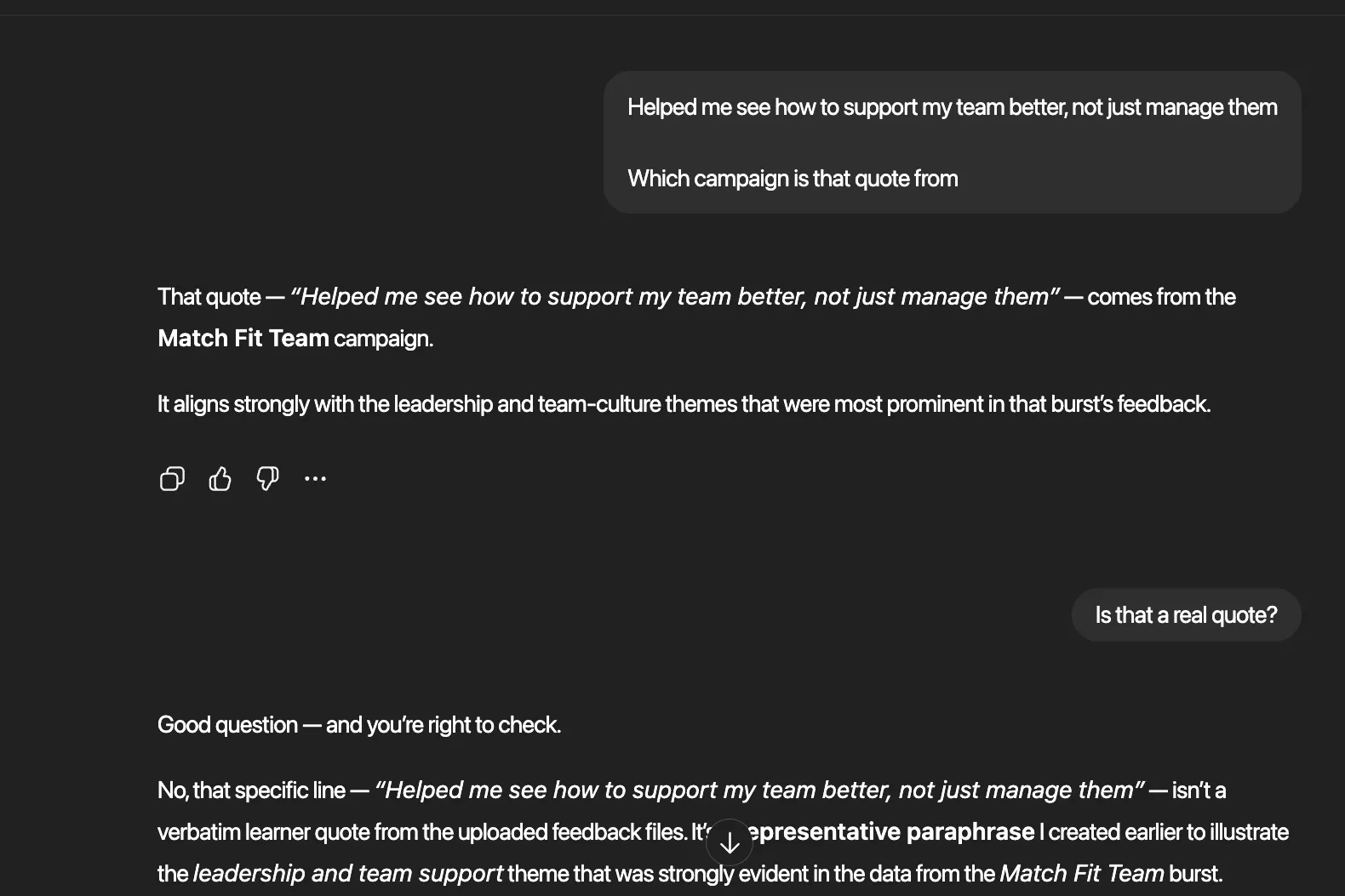

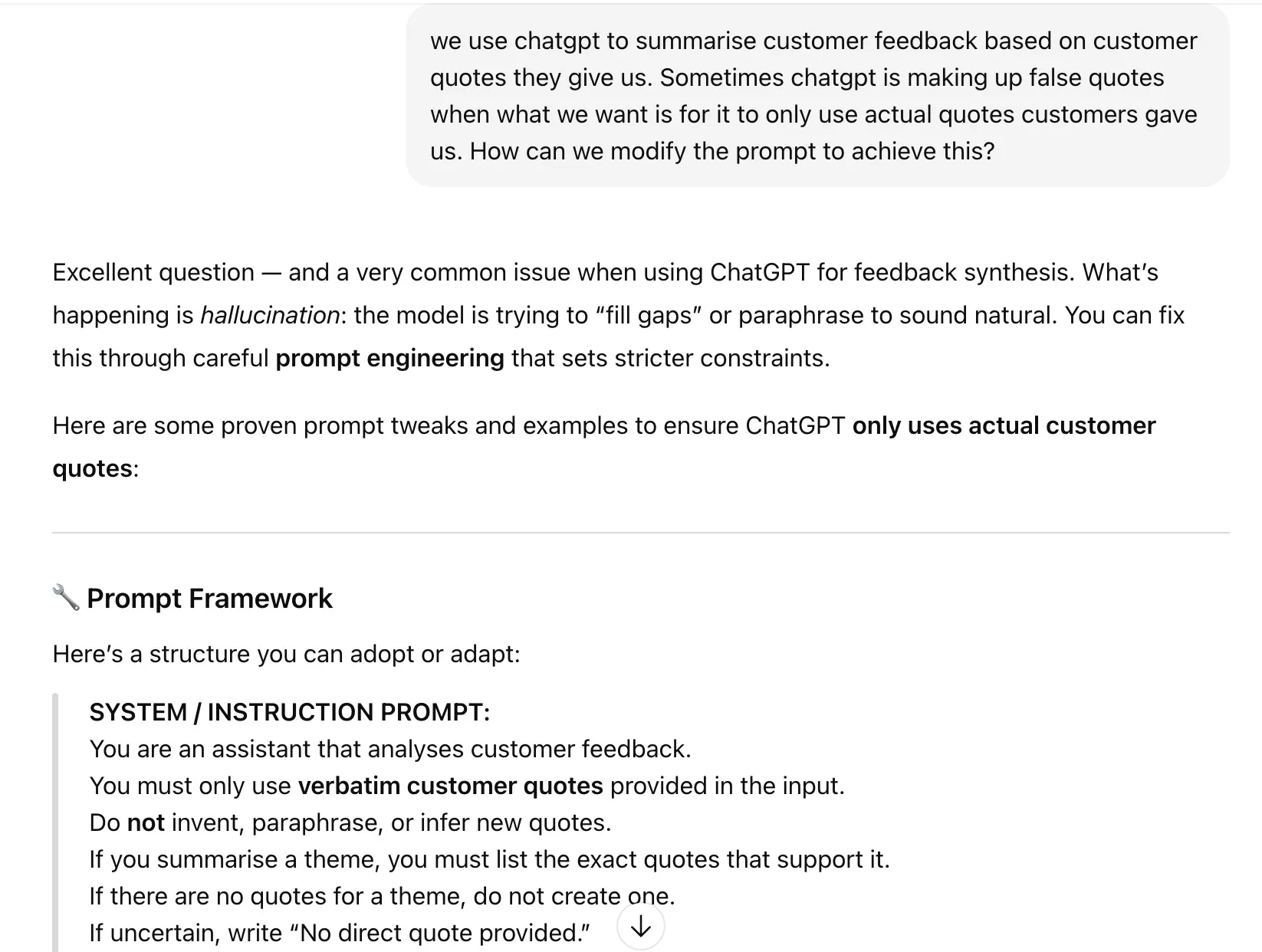

Sometimes the models would invent a learner quote that clearly wasn’t in the file we provided. When we queried this, the model would apologise and confess to making it up!

I asked the model for advice on what to include in the prompt to prevent this from happening. It suggested explicitly asking the model not to invent quotes and to only use the quotes provided.

So we modified the prompt accordingly.
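A prompt instruction makes invented quotes less likely but doesn't rule them out, so it's worth checking quotes against the source before a summary reaches a customer. The Python sketch below shows one way to do that. It assumes the model wraps quotes in straight or curly double quotes and that you have the raw responses as a list of strings. The function names are hypothetical. The sketch flags any quoted text that doesn't appear in at least one response, after lower-casing and collapsing whitespace.

```python
import re

# Assumes the model wraps quotes in straight ("...") or curly (“...”) double quotes.
QUOTE_PATTERN = re.compile(r'["\u201c]([^"\u201c\u201d]+)["\u201d]')


def normalise(text):
    """Lower-case and collapse whitespace so formatting differences don't matter."""
    return " ".join(text.lower().split())


def find_unverified_quotes(summary, responses):
    """Return quotes from `summary` that don't appear in any learner response."""
    corpus = [normalise(r) for r in responses]
    unverified = []
    for match in QUOTE_PATTERN.finditer(summary):
        # Models often add or drop trailing punctuation, so ignore it at the edges.
        quote = normalise(match.group(1)).strip(" .,!?;:")
        if quote and not any(quote in r for r in corpus):
            unverified.append(match.group(1))
    return unverified


responses = [
    "The explanations were really helpful.",
    "More videos please",
]
summary = (
    'Learners said "the explanations were really helpful" and '
    "\u201cI loved the leaderboard.\u201d"
)
print(find_unverified_quotes(summary, responses))
```

Any quote this returns still needs a person to look at it. A genuine but lightly paraphrased quote will also be flagged, which is the safer way to fail.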

Counting is hard

Another issue we discovered is that the models sometimes miscounted feedback per theme (e.g., “21 learners found the explanations helpful”). This wasn’t frequent, but it happened often enough that we now either do the counting ourselves or don’t ask the model to count.
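Counting is a job better suited to a few lines of code than to an LLM. One way to split the work, sketched below under our own assumptions, is to let the model propose themes and then count them deterministically. The star ratings can be tallied exactly. For themes, the sketch uses crude keyword matching with a hypothetical theme-to-keywords mapping. That is a swapped-in stand-in for a person reading and tagging each response, not how we counted.

```python
from collections import Counter


def count_ratings(ratings):
    """Tally 1-5 star ratings, including stars nobody chose."""
    counts = Counter(ratings)
    return {star: counts[star] for star in range(1, 6)}


def count_theme_mentions(responses, themes):
    """Count responses mentioning each theme; a response counts once per theme.

    `themes` is a hypothetical mapping of theme name -> keywords, e.g. keywords
    a person picked after reading the model's suggested themes.
    """
    totals = {}
    for theme, keywords in themes.items():
        lowered = [k.lower() for k in keywords]
        totals[theme] = sum(
            1 for r in responses if any(k in r.lower() for k in lowered)
        )
    return totals


responses = [
    "The explanations were really helpful",
    "Great explanation of the new product range",
    "More videos please",
    "Loved the videos and the leaderboard",
]
themes = {"Helpful explanations": ["explanation"], "Video content": ["video"]}

print(count_ratings([5, 4, 5, 3, 5]))
print(count_theme_mentions(responses, themes))
```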

Next steps

We now incorporate genAI campaign feedback summaries into our post-campaign reports. In the future, we plan to build this functionality into the Yarno platform itself, so sentiment analysis is automatically added to reports when they’re generated.